How to Set Up and Configure the ELK Stack for Effective Log Management

Introduction to the ELK Stack and its components:

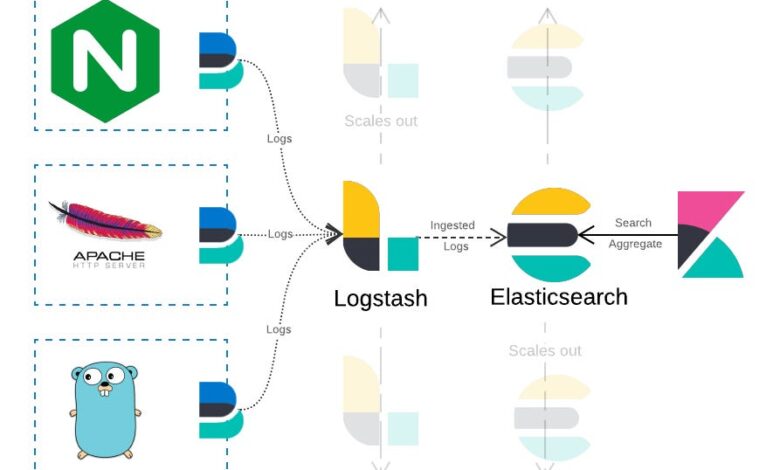

The ELK Stack is a popular open-source log management solution that helps businesses and organizations collect, analyze, and visualize large volumes of data from many sources in near real time. It consists of three main components, Elasticsearch, Logstash, and Kibana, which work together to provide a complete solution for managing and monitoring logs.

1. Elasticsearch: Elasticsearch is a distributed search and analytics engine that stores and indexes the collected log data. It is built on Apache Lucene, which enables fast full-text search, aggregations, and analytics. Elasticsearch scales horizontally, tolerates node failures by replicating data across nodes, and makes newly indexed data searchable in near real time. Its RESTful JSON API makes it easy to integrate with other systems for data retrieval.

2. Logstash: Logstash is an open-source, server-side data processing pipeline that ingests logs from many sources, such as files, databases, network protocols, and message queues, parses and transforms them, and then sends them to a destination, most commonly Elasticsearch, for indexing. It supports more than 200 plugins and is highly configurable for different use cases.

3. Kibana: Kibana is a web-based visualization tool for exploring and analyzing the log data indexed in Elasticsearch through interactive dashboards and charts. Its drag-and-drop interface lets users, including those with limited technical knowledge, quickly build visualizations that fit their requirements. It also offers …
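Because the Elasticsearch REST API is plain HTTP and JSON, any language can query it. The sketch below uses only Python's standard library to build a full-text search request for the `_search` endpoint. The index name `app-logs`, the field `message`, and the unsecured local node at `http://localhost:9200` are assumptions for illustration; Elasticsearch 8.x enables TLS and authentication by default, so a real setup would also need HTTPS and credentials.

```python
import json
import urllib.request


def build_search_request(base_url: str, index: str, text: str, size: int = 10) -> urllib.request.Request:
    """Build a POST /<index>/_search request with a full-text match query."""
    body = {
        "size": size,
        # "match" runs a full-text query against the (assumed) "message" field.
        "query": {"match": {"message": text}},
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/{index}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def search(base_url: str, index: str, text: str) -> list:
    """Send the request and return the _source of every matching document."""
    req = build_search_request(base_url, index, text)
    with urllib.request.urlopen(req, timeout=10) as resp:
        result = json.load(resp)
    return [hit["_source"] for hit in result["hits"]["hits"]]
```

For example, `search("http://localhost:9200", "app-logs", "connection timeout")` would return the stored log events whose `message` matches those words. Separating request construction from sending keeps the query easy to inspect and test.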
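To make Logstash's "parsing or transformation" step concrete: its grok filter matches unstructured lines against named patterns and emits structured fields. The Python sketch below is not Logstash. It only mimics that step with a plain regular expression for the Apache common access-log format, and the field names (`client_ip`, `status`, and so on) are chosen for illustration rather than taken from any Logstash pattern.

```python
import re
from typing import Optional

# Apache/NGINX "common" access-log format:
# host ident authuser [timestamp] "method path protocol" status bytes
COMMON_LOG = re.compile(
    r'(?P<client_ip>\S+) \S+ (?P<user>\S+) \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) (?P<protocol>[^"]+)" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def parse_line(line: str) -> Optional[dict]:
    """Turn one raw access-log line into a structured event, or None if it doesn't match."""
    m = COMMON_LOG.match(line)
    if m is None:
        return None  # Logstash tags events like this with _grokparsefailure
    event = m.groupdict()
    # Convert numeric fields so they can be aggregated later (like grok's :int suffix).
    event["status"] = int(event["status"])
    event["bytes"] = 0 if event["bytes"] == "-" else int(event["bytes"])
    return event
```

A line such as `203.0.113.7 - alice [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326` becomes a dictionary whose `status` is the integer 200, which is what makes later searches and charts on status codes possible.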

Understanding the importance of effective log management:

Effective log management is crucial for any organization, regardless of size or industry. Logs are a fundamental part of any system because they provide valuable insight into the health, performance, and security of an application or infrastructure. However, many organizations overlook proper log management and only realize its significance once they face a critical issue.

In this section, we will look at why effective log management is essential and how it can benefit your organization.

1. Identify and troubleshoot issues

Logs are a vital tool for identifying errors, bugs, and other issues within a system. By collecting all logs in one central location, such as the ELK Stack, you can quickly pinpoint the source of a problem and resolve it before it escalates. With the near real-time monitoring the ELK Stack provides, administrators can watch their systems proactively and detect anomalies before they cause problems.

2. Improve system performance

Logs contain valuable information about the performance of the different components of a system. By parsing these logs with Logstash and analyzing them in Kibana, you can identify bottlenecks or inefficiencies that keep your system from performing well, and then make targeted changes to improve it.

3. Ensure security and compliance

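Effective log management plays a crucial role in keeping your systems secure and in meeting regulatory requirements such as HIPAA or GDPR. Security-relevant logs record who accessed which systems and when, which helps you detect intrusions and provide an audit trail when regulations require one.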

Step-by-step guide to setting up the ELK Stack on a server:

1. Choose and prepare your server:

The first step in setting up the ELK Stack is to choose a suitable server, either a physical machine or a virtual machine, depending on your requirements. Make sure it has enough RAM, CPU, and storage to handle the expected volume of incoming logs.
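Memory is usually the resource that matters most. A guideline commonly cited in Elastic's documentation is to give the Elasticsearch JVM heap no more than half of the machine's RAM, leaving the rest to the operating system's file cache, and to stay below the threshold (around 32 GB) at which the JVM stops using compressed object pointers. The arithmetic is sketched below; the 30 GB cap is a conservative assumption, and recent Elasticsearch versions size the heap automatically unless you override it.

```python
def recommended_heap_gb(total_ram_gb: float, cap_gb: float = 30.0) -> float:
    """Heap = min(50% of RAM, compressed-oops cap); the remainder goes to the OS file cache."""
    if total_ram_gb <= 0:
        raise ValueError("total_ram_gb must be positive")
    return min(total_ram_gb / 2, cap_gb)


def jvm_heap_flags(heap_gb: float) -> list:
    """Render matching -Xms/-Xmx flags in megabytes (min and max heap set to the same value)."""
    size = f"{int(heap_gb * 1024)}m"
    return [f"-Xms{size}", f"-Xmx{size}"]
```

For a 16 GB server this suggests an 8 GB heap (`-Xms8192m`, `-Xmx8192m`); a 128 GB server is still capped at 30 GB, with the rest left to the file cache that Lucene relies on.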

2. Install necessary dependencies:

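Before installing the ELK Stack, check its dependencies. Elasticsearch and Logstash run on the Java Virtual Machine, but recent versions of both ship with a bundled JDK, so a separate Java installation is usually unnecessary, and Python is not required. If you prefer to use your own Java installation, make sure its version is supported by the Elastic release you are installing.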

3. Download and install Elasticsearch:

Elasticsearch is the core component of the ELK Stack, responsible for storing and indexing logs. Download the version for your operating system from the official Elastic website, or install it from Elastic's package repositories, and follow the installation instructions for your platform.
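Once the service is running, a quick way to confirm the installation is to request the root endpoint (port 9200 by default), which returns a small JSON document describing the node, including `version.number`. The sketch below parses such a response and checks it against a minimum version; the sample body in the usage note is abbreviated and hypothetical, the `(7, 10)` minimum is an arbitrary example, and on Elasticsearch 8.x fetching the response normally requires HTTPS and the `elastic` user's credentials.

```python
import json


def parse_root_response(raw: str) -> dict:
    """Extract the fields worth checking after installation from the GET / response."""
    info = json.loads(raw)
    parts = info["version"]["number"].split(".")
    return {
        "node": info["name"],
        "cluster": info["cluster_name"],
        "version": (int(parts[0]), int(parts[1])),  # (major, minor)
    }


def meets_minimum(info: dict, minimum: tuple = (7, 10)) -> bool:
    """Compare (major, minor) tuples, e.g. (8, 11) >= (7, 10)."""
    return info["version"] >= minimum
```

Given a body such as `{"name": "node-1", "cluster_name": "logging", "version": {"number": "8.11.3"}}`, `parse_root_response` yields node `node-1`, cluster `logging`, and version `(8, 11)`.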

4. Configure Elasticsearch:

After installation, configure a few basic settings before starting Elasticsearch, such as the data directory and network settings. You can also set the cluster name and node roles at this stage. These settings live in the elasticsearch.yml configuration file.
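The Python sketch below renders a minimal single-node `elasticsearch.yml` from a dictionary so the relevant keys are visible in one place. `cluster.name`, `node.name`, `node.roles`, `path.data`, `network.host`, `http.port`, and `discovery.type` are real Elasticsearch settings, but every value shown (names, paths, roles, single-node discovery) is an assumption suited to a one-server logging setup. In practice you would usually edit the file by hand or with a configuration-management tool.

```python
def render_elasticsearch_yml(settings: dict) -> str:
    """Render flat Elasticsearch settings as YAML lines (lists become [a, b])."""
    lines = []
    for key, value in settings.items():
        if isinstance(value, (list, tuple)):
            value = "[" + ", ".join(str(v) for v in value) + "]"
        lines.append(f"{key}: {value}")
    return "\n".join(lines) + "\n"


# Hypothetical values for a single-server logging node.
SETTINGS = {
    "cluster.name": "logging-cluster",
    "node.name": "log-node-1",
    "node.roles": ["master", "data", "ingest"],
    "path.data": "/var/lib/elasticsearch",
    "network.host": "127.0.0.1",      # listen on localhost only
    "http.port": 9200,
    "discovery.type": "single-node",  # skip multi-node cluster discovery
}
```

`render_elasticsearch_yml(SETTINGS)` produces lines such as `cluster.name: logging-cluster` and `node.roles: [master, data, ingest]`. Binding to `127.0.0.1` keeps the node private while you finish securing it; a multi-node cluster would instead need a routable address and real discovery settings.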

5. Install Logstash:

Logstash collects logs from various sources and sends them to Elasticsearch for indexing. To install it, download it from the official Elastic website, or use the same package repository as Elasticsearch, and follow the installation instructions.

6. Configure Logstash pipelines:

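Once Logstash is installed, configure a pipeline for each log source you want to collect. Each pipeline defines inputs (where the data comes from), filters (how it is parsed and transformed), and outputs (where it is sent, typically Elasticsearch).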
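The Python helper below fills a template for one hypothetical source: web-server logs shipped by Filebeat to port 5044, parsed with Logstash's built-in `COMBINEDAPACHELOG` grok pattern, and written to daily `weblogs-*` indices. The `beats`, `grok`, and `elasticsearch` plugins and their options are standard Logstash ones, while the port, host, and index prefix are assumptions. On package installs, pipeline files like this typically go in `/etc/logstash/conf.d/`.

```python
from string import Template

# Logstash pipeline text; $placeholders are filled in by Python, %{...} is Logstash syntax.
PIPELINE = Template("""\
input {
  beats {
    port => $beats_port
  }
}

filter {
  grok {
    match => { "message" => "%{COMBINEDAPACHELOG}" }
  }
}

output {
  elasticsearch {
    hosts => ["$es_url"]
    index => "$index_prefix-%{+YYYY.MM.dd}"
  }
}
""")


def render_pipeline(beats_port=5044, es_url="http://localhost:9200", index_prefix="weblogs"):
    """Return the text of a .conf pipeline file for one log source."""
    return PIPELINE.substitute(beats_port=beats_port, es_url=es_url, index_prefix=index_prefix)
```

`string.Template` is used instead of `str.format` because the pipeline syntax is full of braces that `format` would try to interpret.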

Best practices for optimizing the ELK Stack for log management:

To manage and analyze logs effectively with the ELK Stack, follow a few best practices that optimize its performance. They improve the efficiency of your log management system and help ensure that you get accurate, actionable insights from your log data.

- Before setting up the ELK Stack, plan how you will collect and ship logs: which logs to collect, where they will be stored, and how often they will be sent. Proper planning avoids unnecessary load on the system and ensures that essential logs are not missed.

- Filebeat, a lightweight log shipper from Elastic's Beats family, collects log files, can apply light processing, and ships them to Logstash or Elasticsearch. Configuring it correctly is essential for good performance. Useful practices include defining multiline patterns for multiline logs such as stack traces, setting the correct separator when decoding CSV fields, and excluding irrelevant lines (for example with exclude_lines).

- Logstash filters transform incoming log data into a structured format before it is stored in Elasticsearch. To avoid processing bottlenecks at high log volumes, keep filter logic lightweight: prefer simple conditionals over complex ones, and avoid running expensive filters such as heavy grok patterns on events that do not need them.

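- Elasticsearch stores log data in indices, which enable fast retrieval during searches. Size and organize your indices according to log volume and retention needs, for example with time-based indices, a sensible number of shards, and index lifecycle management (ILM) policies that roll over and delete old data.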
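Multiline handling is the Filebeat setting most often gotten wrong. A common configuration for Java stack traces on Filebeat's log input treats any line that starts with whitespace as a continuation of the previous event (`multiline.type: pattern`, `multiline.pattern: '^[[:space:]]'`, `multiline.negate: false`, `multiline.match: after`), and `exclude_lines` drops events matching given regular expressions, applied after multiline lines have been combined. The Python sketch below only emulates that grouping-then-filtering logic to show its effect; it is not Filebeat code, and the `^DEBUG` exclusion pattern is an assumption.

```python
import re
from typing import Iterable, List

CONTINUATION = re.compile(r"^\s")   # stands in for multiline.pattern '^[[:space:]]'
EXCLUDE = [re.compile(r"^DEBUG")]   # stands in for exclude_lines: ['^DEBUG'] (assumed)


def group_multiline(lines: Iterable[str]) -> List[str]:
    """Append continuation lines to the previous event (negate: false, match: after)."""
    events: List[str] = []
    for line in lines:
        if CONTINUATION.match(line) and events:
            events[-1] += "\n" + line
        else:
            events.append(line)
    return events


def ship(lines: Iterable[str]) -> List[str]:
    """Group lines into events first, then drop excluded events."""
    return [e for e in group_multiline(lines) if not any(p.search(e) for p in EXCLUDE)]
```

With this logic, the indented `at com.example...` frames of a stack trace stay attached to their exception line as one event, so a single error becomes one searchable document instead of dozens of fragments.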
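For logs, a common layout is time-based indices, for example one index per day as produced by Logstash's `%{+YYYY.MM.dd}` naming. Small per-day indices make retention cheap, because old data is removed by deleting whole indices rather than individual documents. In current versions, index lifecycle management (ILM) and data streams automate this; the Python sketch below only shows the underlying arithmetic for a hypothetical `weblogs-` prefix and retention period.

```python
from datetime import date, timedelta
from typing import Iterable, List

PREFIX = "weblogs-"  # hypothetical index prefix


def index_for(day: date) -> str:
    """Daily index name, e.g. weblogs-2024.03.07 (same layout as %{+YYYY.MM.dd})."""
    return f"{PREFIX}{day:%Y.%m.%d}"


def expired_indices(names: Iterable[str], today: date, keep_days: int) -> List[str]:
    """Return daily indices older than the retention window, oldest first."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        if not name.startswith(PREFIX):
            continue  # leave unrelated indices alone
        day = date(*(int(p) for p in name[len(PREFIX):].split(".")))
        if day < cutoff:
            expired.append(name)
    return sorted(expired)  # zero-padded names sort chronologically
```

With a 7-day retention evaluated on 2024-03-10, the cutoff is 2024-03-03, so `weblogs-2024.03.01` and `weblogs-2024.03.02` would be candidates for deletion while `weblogs-2024.03.03` is kept.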

Troubleshooting common issues and errors in the ELK Stack:

One of the main advantages of the ELK Stack is its ability to collect, process, and analyze large volumes of data in near real time. However, like any software, it can run into issues and errors during setup or everyday use. In this section, we will discuss some common problems and how to troubleshoot them effectively.

1. Elasticsearch Connection Error:

One of the most common issues when setting up the ELK Stack is a connection error between Logstash and Elasticsearch, usually caused by incorrect configuration settings or network connectivity problems between the two services. To troubleshoot it, check that the Elasticsearch service is running on your server and that the host and port (9200 by default) in your Logstash configuration file are correct. If the issue persists, review the Elasticsearch and Logstash logs for errors, then try restarting both services.
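Before digging into Logstash settings, it helps to confirm that the Elasticsearch port is reachable from the Logstash host at all. The Python sketch below performs only a TCP connect test; it does not check TLS, credentials, or cluster health. The `localhost` and `9200` values in the usage note assume a default local install.

```python
import socket


def port_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and DNS resolution failures.
        return False
```

Running `port_open("localhost", 9200)` on the Logstash host separates the two failure modes: `False` points to a stopped service, a wrong address, or a firewall, while `True` combined with continued Logstash errors points to protocol (HTTP vs HTTPS) or authentication settings.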

2. Kibana Dashboard Not Loading:

If you cannot reach your Kibana dashboard after setting up the ELK Stack, first confirm that the Kibana service is running and listening on its port (5601 by default). If you serve Kibana through an NGINX reverse proxy, the problem may be a misconfigured proxy: check the NGINX configuration for errors (for example with nginx -t) and make sure its proxy_pass directive points to the address and port Kibana is listening on. Also check file permissions: the account that runs the Kibana service needs read access to its configuration and write access to its data and log directories.

Real-world examples and use cases of the ELK Stack in action:

The ELK Stack is a powerful and versatile log management solution that has become very popular in recent years. It combines three open-source tools, Elasticsearch, Logstash, and Kibana, which together provide a complete solution for collecting, storing, and visualizing large amounts of data.

But how does the ELK Stack work in the real world? In this section, we will explore some common use cases and examples of organizations using it to gain valuable insights from their logs.

One of the most common use cases for the ELK Stack is monitoring server logs. Logstash collects incoming server logs and sends them to a central location (Elasticsearch), where they are indexed and stored for easy retrieval. This lets system administrators monitor server performance in near real time and quickly identify issues or anomalies.

For example, a company's IT team can use the ELK Stack to monitor its web servers' access logs and track user activity on its website. The team can then use Kibana visualizations to analyze user behavior patterns and detect suspicious activity, such as brute-force attacks or unauthorized access attempts.
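The query behind a brute-force visualization might look like the sketch below: count failed (HTTP 401) requests per client IP over a recent time window and keep only IPs above a threshold, using a `terms` aggregation with `min_doc_count`. The field names `http.response.status_code` and `source.ip` follow the Elastic Common Schema but are assumptions about how your logs are mapped, as are the default threshold and window. The body would be sent to `POST /<index>/_search`.

```python
def failed_login_query(threshold: int = 20, window: str = "15m", top: int = 10) -> dict:
    """Build a _search body that buckets recent 401 responses by client IP."""
    return {
        "size": 0,  # only the aggregation is needed, not the raw hits
        "query": {
            "bool": {
                "filter": [
                    {"term": {"http.response.status_code": 401}},
                    {"range": {"@timestamp": {"gte": f"now-{window}"}}},
                ]
            }
        },
        "aggs": {
            "by_ip": {
                "terms": {
                    "field": "source.ip",
                    "size": top,
                    "min_doc_count": threshold,  # drop IPs below the threshold
                }
            }
        },
    }


def suspicious_ips(response: dict) -> list:
    """Extract (ip, failure_count) pairs from the search response."""
    return [(b["key"], b["doc_count"]) for b in response["aggregations"]["by_ip"]["buckets"]]
```

Filters in `bool.filter` skip relevance scoring and can be cached, which suits this kind of yes/no matching on status codes and time ranges.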

Conclusion:

In this article, we have discussed why setting up and configuring the ELK Stack matters for effective log management. Now, let's summarize the main benefits of implementing it in your organization.

One of the most significant advantages of the ELK Stack is that it centralizes all your logs. You no longer need to access multiple systems or servers to gather log data: everything is collected and stored in one place, which makes it easier to monitor and analyze.

The ELK Stack also lets you monitor your logs in near real time, giving you prompt insight into potential issues or anomalies in your system. This helps you identify and address problems before they escalate, keeping operations smooth and minimizing downtime.

With all your logs in one place and near real-time monitoring, troubleshooting with the ELK Stack becomes faster and more efficient. You can search large volumes of data, filter by many parameters, and track down the root cause of an issue far more quickly than by inspecting servers one at a time.