Interpreting the Key Technologies Involved in Autonomous Driving

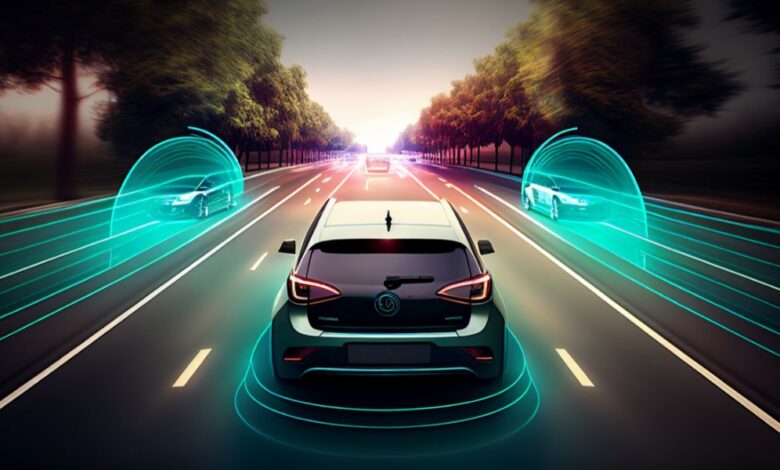

01 Environment Perception

Intelligent technologies, such as in-vehicle sensing devices (visual sensors and in-vehicle radar), are the primary means of collecting data about the vehicle’s surroundings and the traffic operating environment. Network technologies such as 5G V2X allow vehicles, roadside infrastructure, and the cloud to exchange this environmental and traffic data. Shared technologies, such as cloud platforms for training autonomous driving algorithms, can collect, store, and share road-situation data (covering both the vehicle’s surroundings and the traffic operating environment) for use in AI training of those algorithms.

02 Environment Recognition

Intelligent technologies such as visual recognition and radar ranging and direction-finding can identify road targets and infrastructure in the traffic operating environment, including surrounding vehicles, traffic signs, and traffic signals, and measure their distance and direction. V2X cooperative communication networks let vehicles exchange recognition data, such as vehicle data, pedestrian positions, and the state of the surrounding and traffic environment, with one another (vehicle-to-vehicle) and with roadside infrastructure (vehicle-to-road). Shared technologies such as traffic management cloud platforms or map cloud platforms can share traffic operational data such as traffic signs and signal phase information.
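Radar ranging and direction-finding report each target as a distance and a direction. Before such a detection can be compared with camera results or shared over V2X, it usually has to be converted into Cartesian coordinates in a common frame. The sketch below shows that conversion under simplifying assumptions: the sensor sits at the vehicle origin, bearing is measured counter-clockwise from straight ahead, and the ego pose (position and heading) is already known from localization. The names `RadarDetection`, `to_vehicle_frame`, and `to_map_frame` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RadarDetection:
    """One radar return: how far away the target is and in which direction."""
    range_m: float      # distance from the sensor, metres
    bearing_deg: float  # direction, degrees; 0 = straight ahead, positive = to the left


def to_vehicle_frame(det: RadarDetection) -> tuple[float, float]:
    """Polar (range, bearing) -> Cartesian (x forward, y left), sensor assumed at the origin."""
    theta = math.radians(det.bearing_deg)
    return det.range_m * math.cos(theta), det.range_m * math.sin(theta)


def to_map_frame(det: RadarDetection, ego_x: float, ego_y: float,
                 ego_heading_deg: float) -> tuple[float, float]:
    """Rotate by the ego heading, then translate by the ego position (2D rigid transform)."""
    x_v, y_v = to_vehicle_frame(det)
    h = math.radians(ego_heading_deg)
    return (ego_x + x_v * math.cos(h) - y_v * math.sin(h),
            ego_y + x_v * math.sin(h) + y_v * math.cos(h))
```

For example, a target 50 m dead ahead of a vehicle at map position (100, 200) with heading 90° lands at approximately (100, 250) in map coordinates, a form that another vehicle or a roadside unit can use directly.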

03 Vehicle Localization

Vehicle localization determines the vehicle’s precise position within its lane and on the map, and it forms the foundation for map creation, driving behavior decisions, and driving trajectory planning. Key intelligent technologies for obtaining localization data include satellite positioning systems and ground-based augmentation systems, inertial navigation systems, and Simultaneous Localization and Mapping (SLAM) (source: medcom.com.pl).
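Satellite positioning and inertial navigation complement each other: inertial data is smooth but drifts over time, while satellite fixes are noisy but do not drift. A common textbook way to combine them is a Kalman filter. The sketch below is a deliberately reduced one-dimensional version (position along the road only), assuming the inertial system supplies a velocity and the satellite receiver supplies a position fix; real systems use multi-dimensional filters and may add SLAM or map matching. `Kalman1D` and its noise parameters are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Kalman1D:
    x: float  # estimated position along the road, metres
    p: float  # variance of that estimate, m^2
    q: float  # process noise added per prediction step (models inertial drift), m^2
    r: float  # satellite (GNSS) measurement noise variance, m^2

    def predict(self, velocity: float, dt: float) -> None:
        """Dead-reckon forward using the inertial velocity; uncertainty grows."""
        self.x += velocity * dt
        self.p += self.q

    def update(self, gnss_position: float) -> None:
        """Blend in a satellite fix, weighted by the Kalman gain; uncertainty shrinks."""
        k = self.p / (self.p + self.r)  # gain: trust the fix more when p is large
        self.x += k * (gnss_position - self.x)
        self.p *= (1.0 - k)
```

After `predict(velocity=10.0, dt=1.0)` from position 0, a noisy fix at 12 m only pulls the estimate part of the way (to about 10.43 m), because the filter's own prediction has lower variance than the assumed satellite noise.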

04 Map Creation

High-precision maps that reflect the vehicle’s surroundings in real time can be built by combining environment perception from in-vehicle sensing devices, environment recognition based on rule-based or artificial intelligence algorithms, and various vehicle localization and SLAM technologies. Shared technologies such as map cloud platforms can share the static data (road infrastructure) and semi-static data (permanent traffic signs) of high-precision maps in real time, while V2X cooperative communication and other connected technologies exchange the highly dynamic data (vehicle positions, movements, and operations, and pedestrian positions) of high-precision maps between vehicles and between vehicles and the road.
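The split between static, semi-static, and highly dynamic map data suggests a layered data structure in which each layer has its own update source and lifetime. The sketch below is a hypothetical illustration, not the format of any real high-precision map standard: static and semi-static layers are merged from cloud updates, while dynamic objects arrive as V2X messages and are dropped once they are older than an assumed time-to-live (0.5 s here, chosen arbitrarily). `LayeredHDMap` and its methods are invented names.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Optional


@dataclass
class LayeredHDMap:
    static: dict = field(default_factory=dict)       # e.g. road infrastructure (map cloud)
    semi_static: dict = field(default_factory=dict)  # e.g. permanent traffic signs (map cloud)
    dynamic: dict = field(default_factory=dict)      # object id -> (timestamp, state), via V2X
    dynamic_ttl_s: float = 0.5                       # assumed lifetime of a dynamic observation

    def apply_cloud_update(self, static: Optional[dict] = None,
                           semi_static: Optional[dict] = None) -> None:
        """Merge slowly changing layers pushed from a map cloud platform."""
        self.static.update(static or {})
        self.semi_static.update(semi_static or {})

    def apply_v2x_message(self, obj_id: str, state: Any,
                          now: Optional[float] = None) -> None:
        """Record the latest state of a vehicle or pedestrian reported over V2X."""
        now = time.monotonic() if now is None else now
        self.dynamic[obj_id] = (now, state)

    def dynamic_snapshot(self, now: Optional[float] = None) -> dict:
        """Return only dynamic objects observed within the time-to-live window."""
        now = time.monotonic() if now is None else now
        return {oid: state for oid, (t, state) in self.dynamic.items()
                if now - t <= self.dynamic_ttl_s}
```

Keeping the dynamic layer time-stamped means stale V2X reports expire on their own, while static layers persist until the cloud sends a replacement.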

05 Path Planning

High-precision maps, vehicle localization technology, path planning algorithms, artificial intelligence chips, and in-vehicle computing platforms are the key intelligent technologies for implementing path planning. Autonomous driving path planning differs fundamentally from car navigation. It relies mainly on high-precision maps and outputs the planned path as a sequence for the vehicle to the behavior decision module, with no need to satisfy human-machine interface output requirements such as sound and visual effects. Car navigation, by contrast, uses standard-precision electronic maps, and its path planning results are presented to the driver through the human-machine interface as suggested driving routes.
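The text does not name a specific path planning algorithm. As one widely used example, A* search finds a shortest path over a graph and returns it as an ordered sequence of nodes, the kind of machine-readable output that could be handed to a downstream decision module. The sketch below runs A* on a small occupancy grid for readability; a real planner would more likely search a lane-level graph derived from the high-precision map. The grid encoding (1 = blocked) and the function name `a_star` are assumptions.

```python
import heapq


def a_star(grid, start, goal):
    """A* on a 4-connected occupancy grid; grid[r][c] == 1 marks a blocked cell.

    Returns the list of cells from start to goal, or None if the goal is unreachable.
    """
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries: (f = g + h, g, cell)
    came_from = {}
    g_cost = {start: 0}
    while open_heap:
        _, g, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk parent links back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if g > g_cost.get(cell, float("inf")):
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_g = g + 1
                if new_g < g_cost.get(nxt, float("inf")):
                    g_cost[nxt] = new_g
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_g + h(nxt), new_g, nxt))
    return None
```

On the grid `[[0, 0, 0], [1, 1, 0], [0, 0, 0]]`, planning from `(0, 0)` to `(2, 0)` has to detour around the blocked middle row, so the returned sequence runs along the top, down the right edge, and back along the bottom.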

06 Behavior Decision

Behavior decision determines the driving behavior the vehicle adopts during its journey, such as normal driving, car following, turning, lane changing, and parking.
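One simple way to choose among the behaviors listed above is a priority-ordered set of rules. The sketch below is illustrative only: the `Situation` fields, the thresholds (`follow_gap_m`, `turn_prep_m`), and the rule order are hypothetical, and production systems often use richer state machines or learned policies instead.

```python
from dataclasses import dataclass
from enum import Enum, auto
from typing import Optional


class Behavior(Enum):
    DRIVE = auto()
    FOLLOW = auto()
    TURN = auto()
    CHANGE_LANE = auto()
    PARK = auto()


@dataclass
class Situation:
    at_destination: bool
    turn_ahead_m: Optional[float]  # distance to the next planned turn; None if no turn
    lead_gap_m: Optional[float]    # gap to the vehicle ahead; None if the lane is clear
    lead_speed_mps: float          # speed of the vehicle ahead (ignored if lane is clear)
    ego_speed_mps: float
    adjacent_lane_free: bool


def decide(s: Situation, follow_gap_m: float = 40.0, turn_prep_m: float = 30.0) -> Behavior:
    """Pick a high-level behavior; earlier rules take priority over later ones."""
    if s.at_destination:
        return Behavior.PARK
    if s.turn_ahead_m is not None and s.turn_ahead_m <= turn_prep_m:
        return Behavior.TURN
    if s.lead_gap_m is not None and s.lead_gap_m < follow_gap_m:
        # A slower vehicle is close ahead: overtake if the next lane is free, else follow it
        if s.adjacent_lane_free and s.lead_speed_mps < s.ego_speed_mps:
            return Behavior.CHANGE_LANE
        return Behavior.FOLLOW
    return Behavior.DRIVE
```

Ordering the rules by priority keeps the logic easy to audit: parking at the destination overrides everything, and lane changes are only considered when a slower vehicle is actually close ahead.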

In the driving assistance stage, behavior decisions are delivered to the driver as alerts, serving as driving suggestions. In the autonomous driving stage, the decision system determines the vehicle’s driving behavior in real time based on vehicle data, recognition data for road infrastructure and target objects, traffic operational data, user travel information, and so on. The resulting decision is handed over to the motion planning module, which works closely with the behavior decision module to carry out lateral trajectory planning and longitudinal speed planning for the vehicle (quoted from medcom).

07 Control Execution

Once the vehicle’s lateral trajectory plan and longitudinal speed plan are formulated, the next step is to generate operational commands for vehicle components such as the steering wheel, throttle, and brakes. The vehicle’s Electronic Control Units (ECUs) receive these commands from the autonomous driving system (the in-vehicle computing platform) over the vehicle bus, and each ECU controls its corresponding actuators and components.
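Commands travel to the ECUs as compact binary messages on the vehicle bus. The text does not name the bus; CAN is the most common choice, and a classic CAN frame carries at most 8 data bytes. The sketch below packs a steering/throttle/brake command into such an 8-byte payload with Python's `struct` module. The byte layout and scaling (0.1° per bit for steering, whole percent for throttle and brake) are entirely hypothetical; real vehicles use manufacturer-specific layouts, typically documented in DBC files.

```python
import struct

# Hypothetical 8-byte payload layout, little-endian:
#   bytes 0-1: steering angle, signed int16, 0.1 degree per bit
#   byte  2  : throttle, 0-100 %
#   byte  3  : brake, 0-100 %
#   bytes 4-7: reserved, zero-filled
_LAYOUT = "<hBB4x"


def encode_command(steer_deg: float, throttle_pct: float, brake_pct: float) -> bytes:
    """Scale, clamp, and pack a control command into an 8-byte payload."""
    raw_steer = round(steer_deg * 10)
    if not -32768 <= raw_steer <= 32767:
        raise ValueError("steering angle out of range for int16 encoding")
    throttle = int(min(max(throttle_pct, 0), 100))  # clamp to the valid percentage range
    brake = int(min(max(brake_pct, 0), 100))
    return struct.pack(_LAYOUT, raw_steer, throttle, brake)


def decode_command(payload: bytes) -> tuple[float, int, int]:
    """Inverse of encode_command, as a receiving ECU might interpret the payload."""
    raw_steer, throttle, brake = struct.unpack(_LAYOUT, payload)
    return raw_steer / 10, throttle, brake
```

Clamping on the sending side is one design option; a real ECU would also validate the message itself, for example with counters and checksums, which this sketch omits.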

Once the vehicle’s components have been actuated, its direction of motion, speed, and position change. The environment perception system keeps acquiring vehicle data and traffic environment data, which leads to new behavior decisions, trajectory planning, and speed planning. New operational commands are then sent to the electronic control units, forming several closed-loop control systems.
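The perceive, plan, command, and respond cycle described above is a feedback loop. The sketch below shows one such loop in miniature: a proportional-integral (PI) controller tracking a planned speed on a toy vehicle model. The PI law, the gains, the acceleration limits, and the drag term are all assumptions chosen for illustration; the text does not specify a control law, and a real vehicle runs several such loops (longitudinal and lateral) at once.

```python
def simulate_speed_loop(target_mps: float, steps: int = 300, dt: float = 0.1,
                        kp: float = 0.8, ki: float = 0.2,
                        accel_limits: tuple = (-6.0, 3.0)) -> float:
    """Run a PI speed loop against a toy vehicle model and return the final speed."""
    speed = 0.0     # measured speed; in a real car this comes from the sensors
    integral = 0.0  # accumulated speed error
    lo, hi = accel_limits
    for _ in range(steps):
        error = target_mps - speed          # compare the measurement with the speed plan
        candidate = integral + error * dt
        cmd = kp * error + ki * candidate   # PI control law -> acceleration command
        if cmd > hi:
            cmd = hi                        # actuator saturated: freeze integral (anti-windup)
        elif cmd < lo:
            cmd = lo
        else:
            integral = candidate
        drag = 0.05 * speed                 # toy resistance term
        speed += (cmd - drag) * dt          # the vehicle responds; next pass re-measures it
    return speed
```

Starting from rest with a 10 m/s target, the loop settles very close to 10 m/s within the simulated 30 s. The integral term is what removes the steady-state error caused by the drag term, and freezing it while the actuator is saturated avoids a large overshoot.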